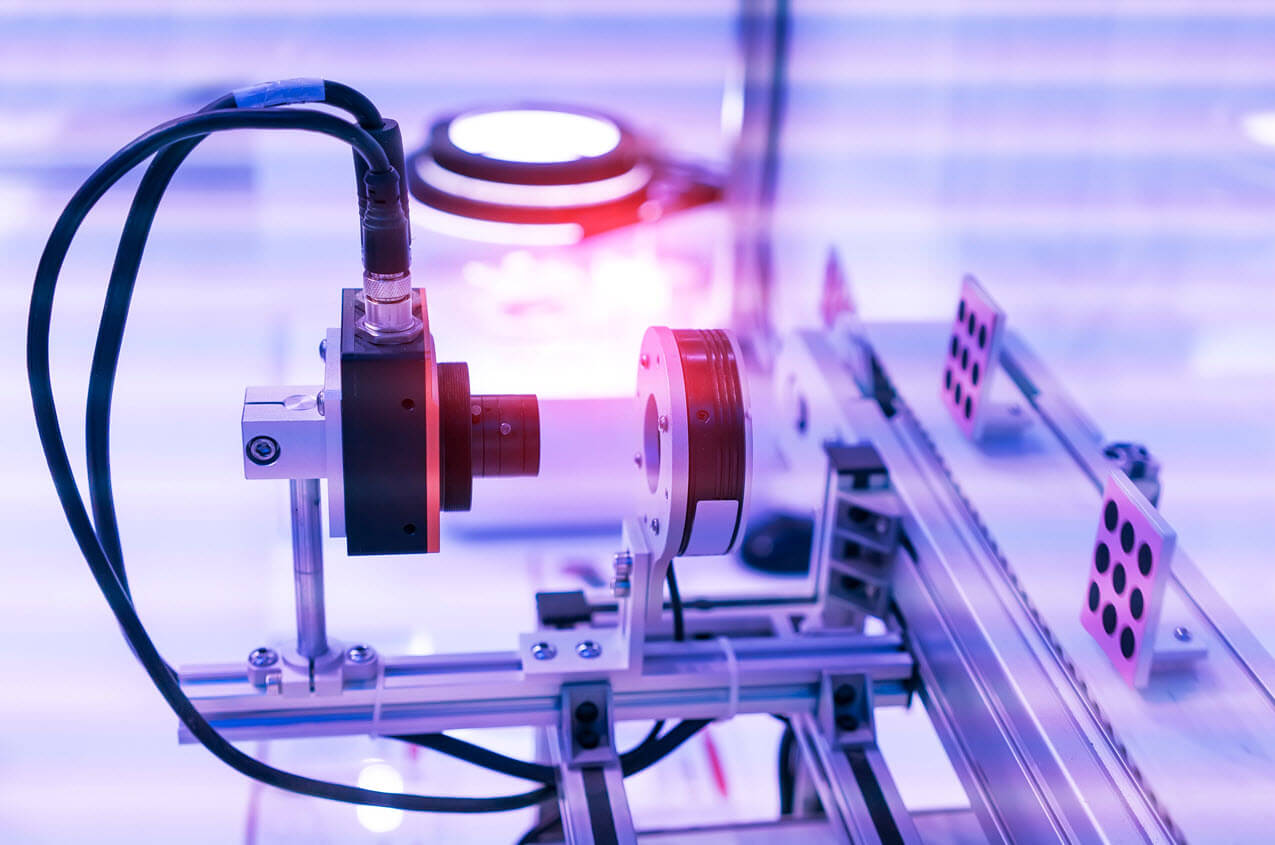

As part of our end-to-end solutions, we provide expert advice and tailored design and implementation of the system components needed to obtain images that serve each customer's specific goals. APIXA's optical system design covers the integration of sensors, optics and illumination, as well as the mechanical, electrical and environmental requirements of a new or existing installation. We are experienced in working within constraints of high speed, limited space, or harsh environments to deliver robust end-use systems and consistent images.

The end goal of a carefully designed imaging platform is to reliably provide image data that clearly highlight the features that are of concern to our customers. These data, in turn, feed our software components for detection, classification, and other vision-related tasks. APIXA makes optimal use of computer vision to create maximum added value. Our expertise is in achieving this goal while meeting the speed, reliability and cost requirements critical to success in any industry.

A broad application range across markets

Alongside common optical system applications, APIXA develops systems tailored to specialized use cases. The typical use case for line scan applications is the inspection of products on a conveyor belt. The setup often contains focused line lights operating at the ideal angle and working distance, while the line scan camera is triggered by an encoder built into the conveyor belt. Microscope images are typically used for quality inspection of IC components or for medical tests. The accompanying illumination and optics need to be carefully considered to show fine details and to avoid or exploit diffractive effects.

For optical systems used in rail inspection, robustness is critical because the system must cope with high speed, vibration, electrical interference, weather, and similar challenges. In hyperspectral classification, halogen or specialized LED illumination is needed to cover a wide spectrum, while ambient light and reflections should be limited. Multiple hyperspectral cameras can be used to increase the spectral range.

From custom integrations to commercial products

Designing an optical system that delivers the right image data is one thing; integrating the system into a customer's existing installation is another. APIXA has proven successful at combining both. Our specialists are able to work with existing production lines and infrastructure to minimize disruptions and avoid expensive modifications. Overall, we iterate on the design from an initial pilot study using our in-house labs, to a prototype on a customer site, and eventually to a scalable, production-ready integrated system. In a number of cases, our system is part of a packaged product commercialized by our customers.

The typical stages in our system design process

Our structured approach ensures high quality and productivity throughout the process, from initial discussions to the final product in full operation at the customer's site:

- In-house feasibility study: In this stage, we create a small-scale test setup to image customer samples. This step helps us determine hardware specifications and provides initial examples and validation for algorithmic solutions.

- Pilot/prototype phase: Here we build an initial system that produces images representative of an end-use system. This helps us identify any issues, provides a test bed for software development, and can be used for generating training data if required for a given project.

- Final system: We deliver and install the end-use system and provide user training. Attention is paid to reliability and robustness, as well as usability for non-technical end users.

APIXA has in-depth experience in image acquisition hardware and continuously follows the latest trends in this field. By identifying the optimal combination of hardware components, we ensure that the acquired images clearly highlight the features that matter most to our customers.

Cameras/sensors

Area scan sensors provide a familiar 2D image from a single capture. Line scan sensors capture a single image row at a time. These are useful in cases where an object is moving through the camera's field of view, so lines are rapidly captured and combined, creating a continuous 2D image.
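As an illustration of how line captures combine into a 2D image, here is a minimal sketch in plain Python. It assumes each captured line arrives as a list of pixel intensities; the function name `assemble_line_scan` and the sample values are hypothetical and not part of any APIXA software or camera SDK.

```python
from typing import Iterable, List

Row = List[int]


def assemble_line_scan(rows: Iterable[Row]) -> List[Row]:
    """Stack successive line captures into a 2D image (a list of rows)."""
    image: List[Row] = []
    width = None
    for row in rows:
        if width is None:
            # The first line fixes the image width.
            width = len(row)
        elif len(row) != width:
            raise ValueError("all captured lines must have the same width")
        image.append(list(row))  # copy so later changes to the input do not alter the image
    return image


# Three hypothetical line captures of a bright stripe passing under the camera.
lines = [[0, 10, 0], [0, 20, 0], [0, 30, 0]]
image = assemble_line_scan(lines)
```

Each captured line becomes one image row, so the image height grows with the distance the object has travelled rather than being fixed by the sensor.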

The preferred spectral selection depends on the application at hand, and can lead to an RGB, monochromatic or hyperspectral sensor:

- RGB sensors are widespread and produce human-interpretable color images. These sensors allow for ready use of premade training data or reliable custom user-annotated image sets.

- Monochromatic sensors are the simplest and most sensitive. They are used either unfiltered, for maximum sensitivity, or filtered, to reveal specific spectral bands.

- Hyperspectral sensors are used when information across a continuous spectral range is needed. Hyperspectral cameras capture hundreds of spectral bands at every pixel location.

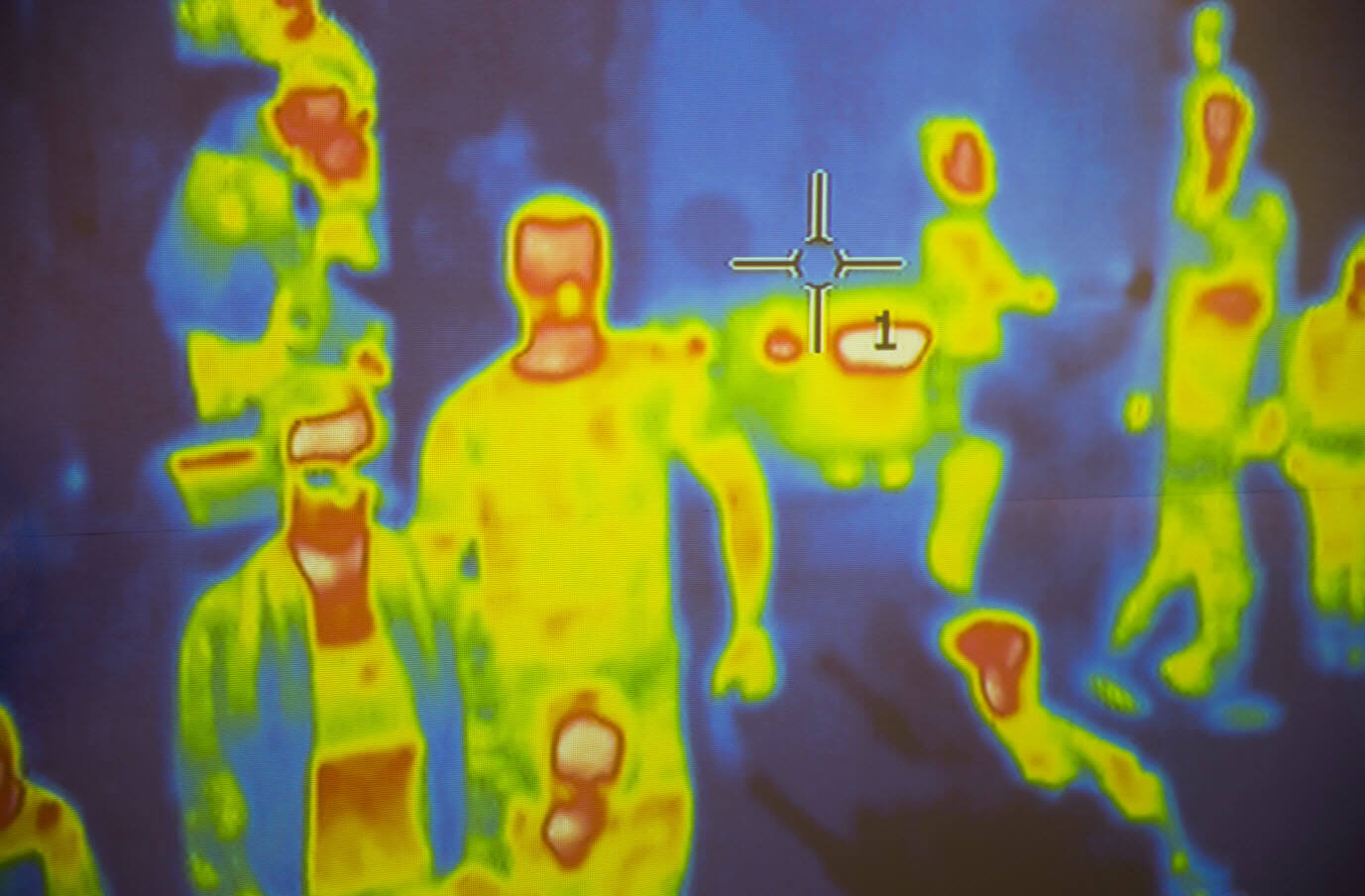

In special cases, more exotic sensors can be employed to capture critical application-specific aspects:

- NIR, SWIR, MWIR, thermal, etc.

- X-ray images

Other aspects, such as resolution, sensitivity, interfaces and triggering, also play an important role and need to be considered in the context of the complete optical system.

Lenses and filters

When selecting lenses and filters, we choose the optical components best suited to a given problem. Concretely, we take into account constraints on viewing angle, spectrum, and light-gathering capacity. In special cases, we can opt for an omnidirectional (fish-eye) lens, which allows a single camera to see features over a 180-degree field of view. This lens type is well suited to simultaneous localization and mapping (SLAM) contexts. Conversely, a telecentric lens may be used to remove perspective effects from an image, allowing for precise measurement of objects at different distances. For lens filters, several options are available, including polarizing, bandpass, and neutral-density (ND) filters.

Illumination

Providing the right illumination is a critical consideration in revealing subtle details and capturing clear images at high speeds.

- Strobing: Strobes provide a short burst of bright light to capture a still image of a moving object. This has advantages beyond a typical camera flash: by taking separate images in rapid succession with and without a strobe, background light can be removed. Similarly, multiple strobes can be sequenced to create multiple images with different lighting angles.

- Infrared: Infrared lights are useful when visible light would be distracting to users or when certain infrared spectral ranges reveal specific properties of target objects.

- Line lights: These are the typical choice for line-scan applications and can focus intense light directly on the imaging area.

- Laser: In extremely high-speed applications, a line light may not be sufficient to fully expose an image. In these cases, we can also deploy laser line sources. This introduces specific optical and safety considerations that we take into account.

- Dark field and oblique: These techniques are useful for imaging details related to surface relief, e.g., scratches, fabric direction, or dust.
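The background removal described under strobing above can be sketched as a pixel-wise subtraction of two frames taken in quick succession. This is only an illustrative sketch: it assumes both frames are equally sized grayscale images stored as nested lists, and the name `subtract_background` and the pixel values are hypothetical.

```python
from typing import List

Image = List[List[int]]


def subtract_background(strobed: Image, ambient: Image) -> Image:
    """Return strobed - ambient per pixel, clamped at zero.

    `strobed` is a frame taken with the strobe firing, `ambient` a frame of
    the same scene taken without it. What remains is approximately the light
    contributed by the strobe alone.
    """
    if len(strobed) != len(ambient):
        raise ValueError("frames must have the same number of rows")
    result: Image = []
    for s_row, a_row in zip(strobed, ambient):
        if len(s_row) != len(a_row):
            raise ValueError("frames must have the same row width")
        # Clamp at zero so noise in the ambient frame cannot produce negative pixels.
        result.append([max(s - a, 0) for s, a in zip(s_row, a_row)])
    return result


# Hypothetical 2x2 frames: the ambient frame holds only background light.
strobed_frame = [[120, 200], [90, 60]]
ambient_frame = [[20, 50], [100, 10]]
strobe_only = subtract_background(strobed_frame, ambient_frame)
```

The subtraction only works well when the scene barely moves between the two exposures, which is why the frames are taken in rapid succession.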

System setup and integration

All elements of the optical setup, including cameras, lights and other hardware, frequently need to be carefully positioned yet solidly fixed. We can design the frames, brackets and other hardware needed to integrate our systems into a customer site. We use environmental enclosures, both off-the-shelf and custom, to place cameras, sensors, and lighting in challenging environments.

For electrical and data connections, we select appropriate solutions for the power, signaling and high-speed data pathways that a successful machine vision system requires.

Sensor fusion

Sensor fusion is the process of combining data from different types of sensors, or data derived from them, such that the resulting information is more useful than what the sources could provide individually. The examples below illustrate this.

- Encoder/PLC integration: communication and triggering using existing signals or specialized sensors; encoders are frequently used in combination with line-scan cameras to capture a line each time the object has moved a fixed distance, creating interpretable 2D images.

- Laser depth sensors and cameras: laser distance sensors for triggering cameras on objects at specific distances, ensuring repeatability and precision; lidar provides 1D or 2D point clouds that can be fused with camera images for refined depth maps with high-quality textures.

- Multiple cameras: whether imaging multiple angles, spectral ranges, or times, combining several camera sources is frequently needed to obtain a complete measurement of a target's state. This can introduce challenges in timing, positioning, and lighting that require precise solutions to overcome.
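To make the encoder-triggered line capture from the list above concrete, the sketch below works out how many encoder pulses should pass between line triggers so that each line covers one pixel of travel, and the resulting line rate. All names and numbers (`pulses_per_line`, a 2000-pulse encoder, 200 mm of belt travel per revolution, 0.5 mm pixels, 500 mm/s belt speed) are assumptions chosen for illustration, not values from an actual installation.

```python
from typing import List


def pulses_per_line(pulses_per_rev: int, travel_per_rev_mm: float,
                    pixel_size_mm: float) -> float:
    """Encoder pulses that correspond to one pixel of object travel."""
    if pulses_per_rev <= 0 or travel_per_rev_mm <= 0 or pixel_size_mm <= 0:
        raise ValueError("all parameters must be positive")
    pulses_per_mm = pulses_per_rev / travel_per_rev_mm
    return pulses_per_mm * pixel_size_mm


def trigger_counts(total_pulses: int, divider: int) -> List[int]:
    """Encoder counts at which a line is captured, one every `divider` pulses."""
    if divider < 1:
        raise ValueError("divider must be at least 1")
    return list(range(divider, total_pulses + 1, divider))


def line_rate_hz(belt_speed_mm_s: float, pixel_size_mm: float) -> float:
    """Lines per second needed to keep pixels square at a given belt speed."""
    return belt_speed_mm_s / pixel_size_mm


# Assumed example: 2000 pulses per revolution, 200 mm travel per revolution,
# and a 0.5 mm object-side pixel size.
divider = round(pulses_per_line(2000, 200.0, 0.5))
triggers = trigger_counts(20, divider)
rate = line_rate_hz(500.0, 0.5)
```

Because the trigger follows the encoder rather than a fixed clock, the spacing between lines stays constant on the object even when the belt speed varies.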